Create a Copywriting Assistant with Locofy.ai and ChatGPT

Goal: Explore how AI-powered tools can generate frontend code and also extend it.

This guide walks you step by step through converting your Figma designs into Next.js code using Locofy.ai, then extending that code with AI features using ChatGPT and the OpenAI API.

Prerequisites:

- You will need your design ready in Figma.

- You will need a Locofy.ai account and have the plugin up and running on your Figma file.

- Your Locofy project must be configured to use Next.js.

- You will need an OpenAI account.

Turn Your Designs Into Next.js Code With Locofy.ai

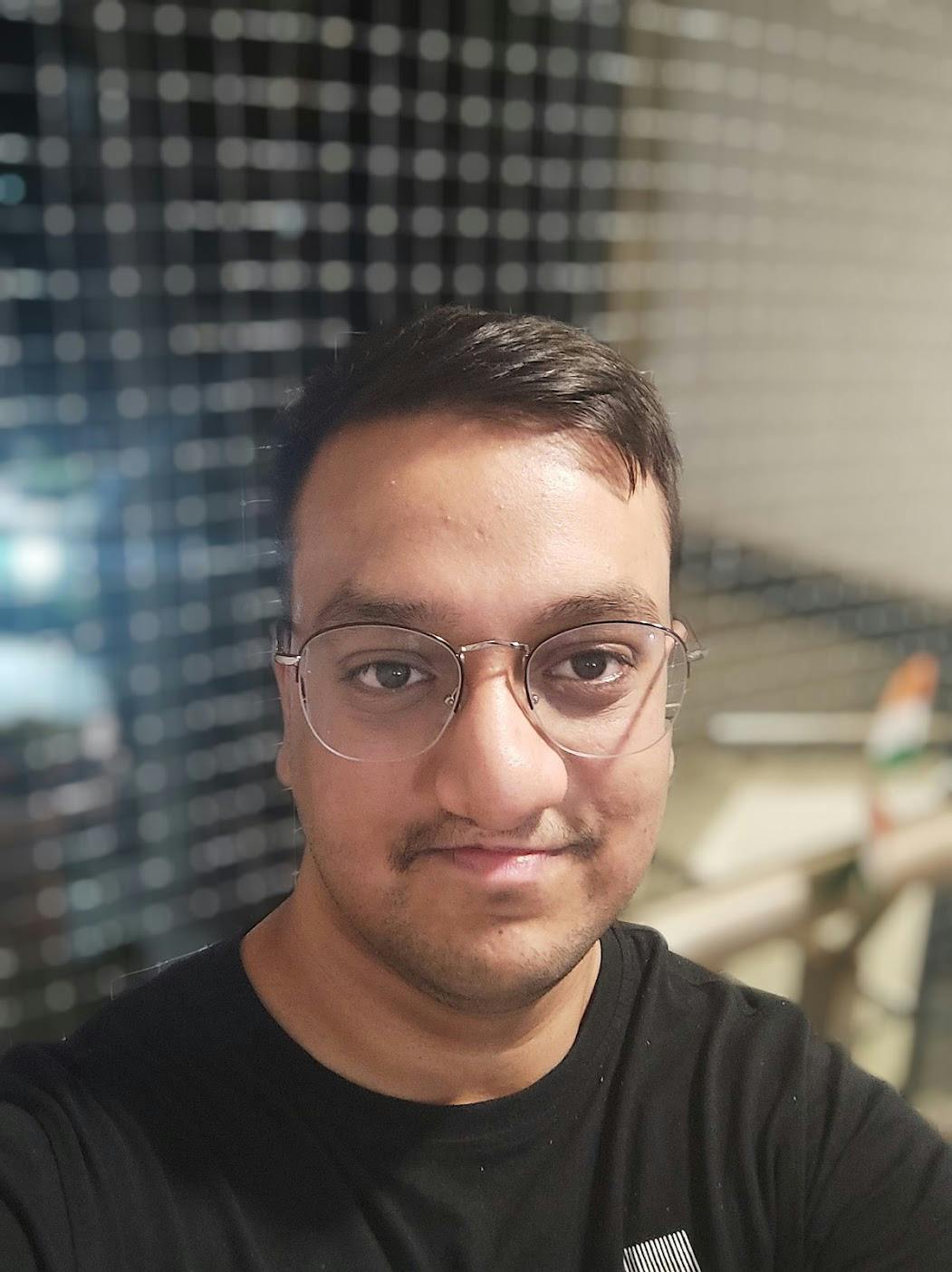

This is the design file that we’ll be using in this guide. It is a single-page design with two text areas: one to collect the input and one to display the generated output.

The first step is to tag our designs. Tagging allows us to turn our designs into working components, for example, buttons, dropdowns, inputs, and more.

You can also work with popular UI libraries such as Material and Bootstrap by tagging your elements.

For this app, you’ll notice items such as:

- Buttons

- Inputs

Now you can make your designs responsive by configuring the styles in the Styles & Layouts tab.

Select the components that need to be responsive. In the Locofy plugin, the Styles & Layouts tab lists the available media queries and states. Choose the breakpoint you want to target, then adjust the styles and layout accordingly.

You can also use auto layout in your designs and let the Locofy plugin take care of layouts on different screen sizes.

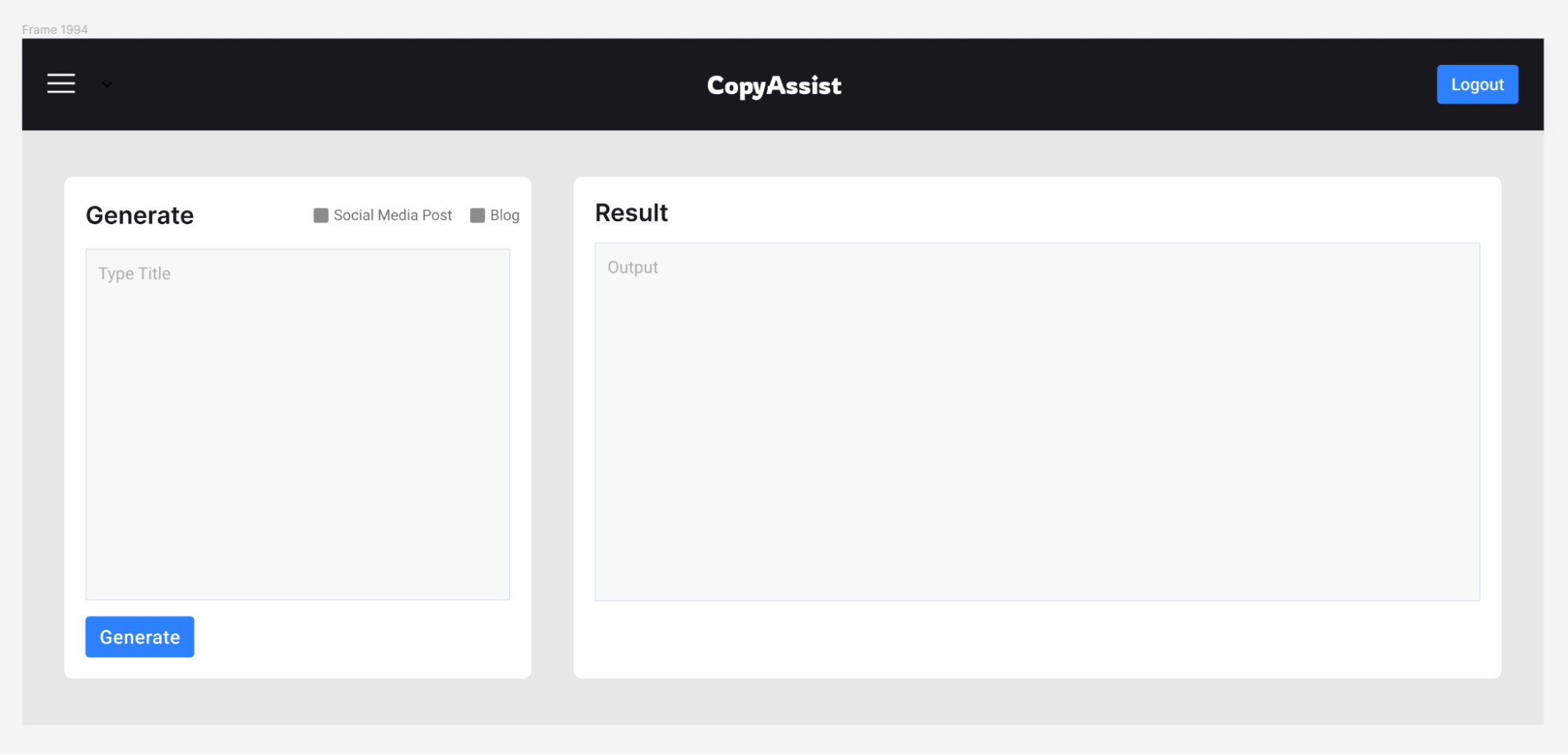

You can trigger actions to build fully featured apps and websites. Head over to the ‘Actions’ tab to assign actions to click events.

Available actions include scrolling an element into view and opening popups or URLs, among others.

Now that our designs are tagged correctly and responsive, we can preview them.

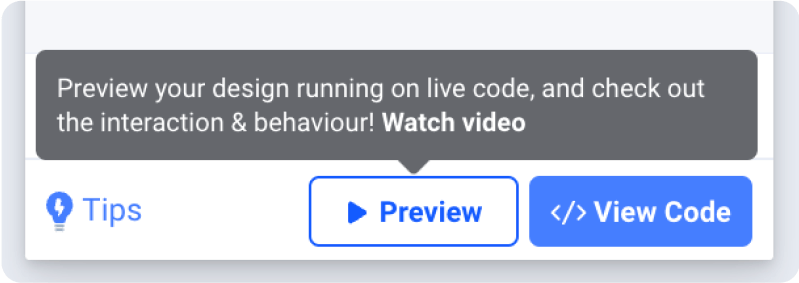

To preview your design, select the frame or layer that you want to view, and click the “Preview” button.

Once you are satisfied with your preview, you can sync your designs to Locofy Builder to add more advanced settings, share your live prototype, and export code.

Click the “View Code” button at the bottom right corner of the plugin and select the frames. Once the sync is complete, click on “View code in the Builder”.

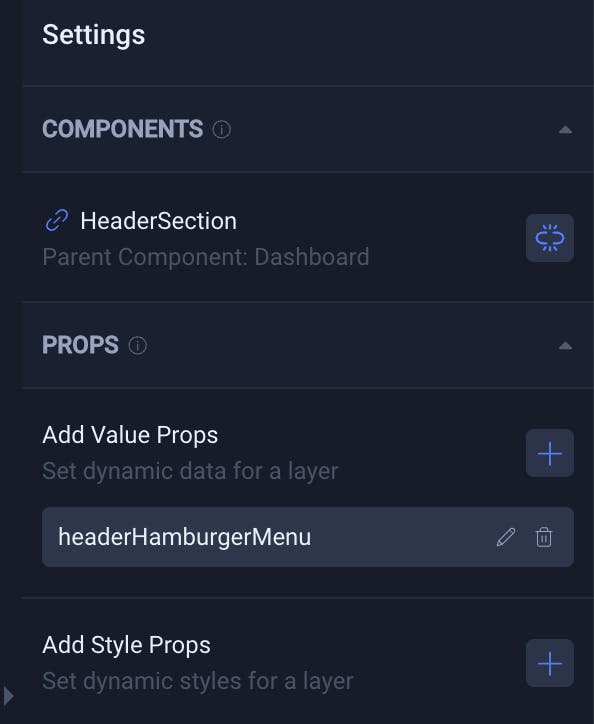

You can turn code generated by Locofy into components inside the Locofy Builder using Auto Components. This allows you to easily extend and reuse the code.

In our app, we can turn our header section into a component. You can also quickly add props using the Locofy Builder.

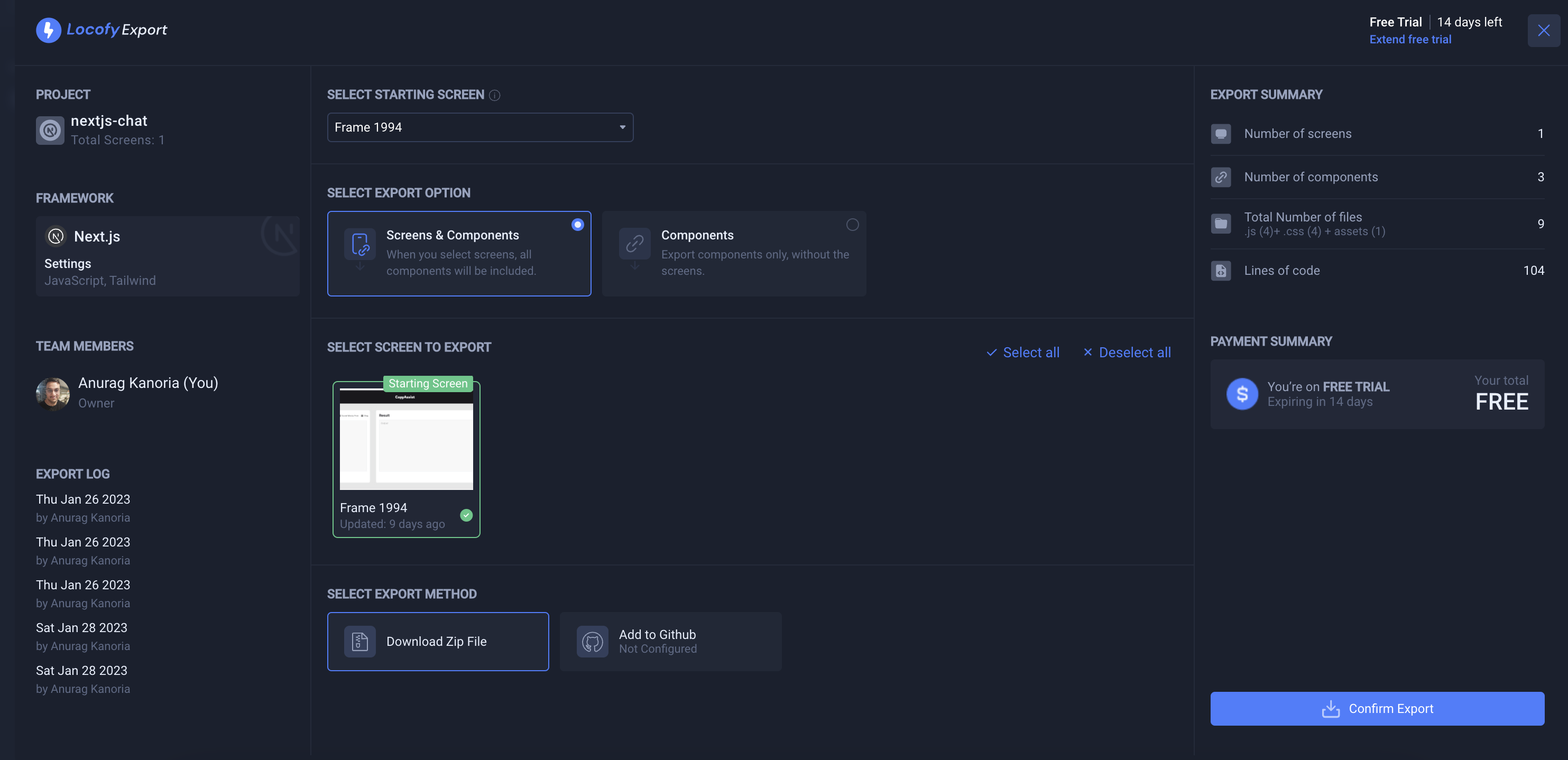

Once the prototype matches the product vision, you can view the live responsive prototype that looks and feels like your end product. Then you can export the code.

Make sure to select all the screens you need, as well as the correct framework and project settings. Once done, you can download your code as a ZIP file by clicking the “Confirm Export” button in the bottom right corner.

Exported Code Overview

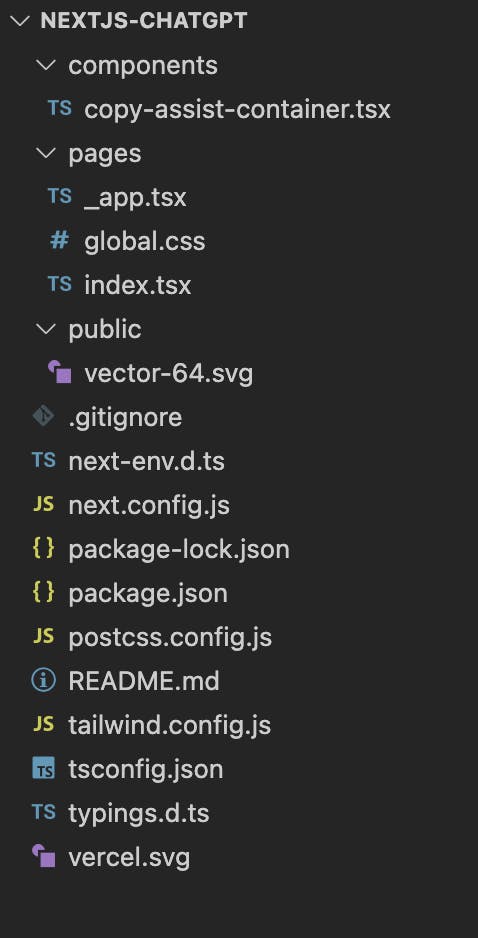

After extracting the zip file you downloaded, you can see how the component and page files are structured.

We have the UI components in the “components” folder, our routes in the “pages” folder, and our media assets in the “public” folder.

Extending Code with ChatGPT

Log in to your ChatGPT account by clicking the ‘Login’ button in the center of the page. If you don’t have an account, click on ‘Sign up’.

Now you will be redirected to the application where you can interact with ChatGPT.

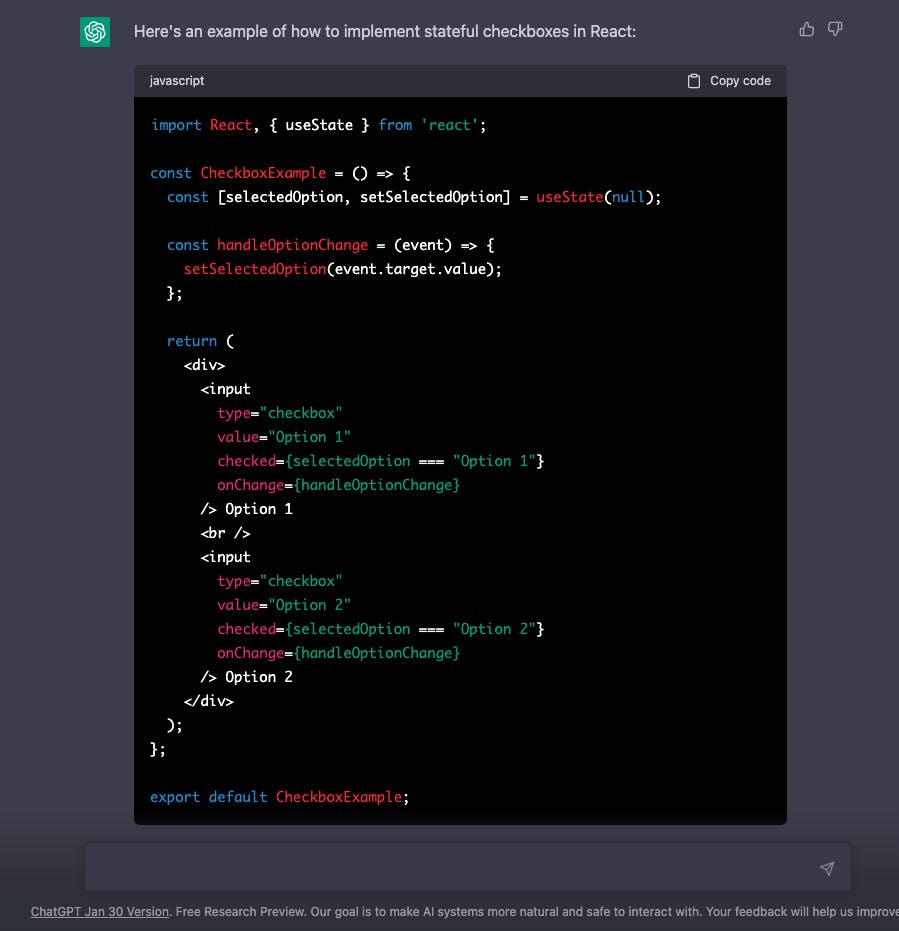

We’ll use ChatGPT to write React code for us, starting by making the two checkboxes stateful. We also want to make sure that only one checkbox can be selected at a time.

For this, we will ask ChatGPT the following question.

The above query will generate the following response:

As you can see, it has generated the exact code we need with our custom logic requirement.

Since the exported code already contains these elements, we can copy the relevant properties from the elements that ChatGPT has created and paste them into our “index.js” file inside the “/pages” directory.
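To make the single-selection requirement concrete, here is a minimal sketch of the state logic, written as a plain function so it can be called from a React `onChange` handler. The names `OPTIONS`, `selectOption`, `social`, and `blog` are assumptions made for illustration; they are not identifiers from the Locofy export or from ChatGPT's response.

```javascript
// Hypothetical option keys; the exported design may name its checkboxes differently.
const OPTIONS = ["social", "blog"];

// Returns a new state object in which only `selected` is checked.
function selectOption(selected) {
  if (!OPTIONS.includes(selected)) {
    throw new Error(`Unknown option: ${selected}`);
  }
  return Object.fromEntries(OPTIONS.map((name) => [name, name === selected]));
}

// In a component this could be wired up roughly as follows (assumed usage):
//   const [checked, setChecked] = useState(selectOption("social"));
//   <input type="checkbox" checked={checked.blog}
//          onChange={() => setChecked(selectOption("blog"))} />
```

Because every call rebuilds the whole state object, checking one box always unchecks the other, which is the behavior described above.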

We can also make our textarea and button elements stateful using ChatGPT by running the following query:

This will generate the following code:

Again, we can extract the logic that we need and paste it into our “index.js” file inside the “/pages” directory.

Your “index.js” file should look like this after making the changes:

Generating Content with OpenAI API

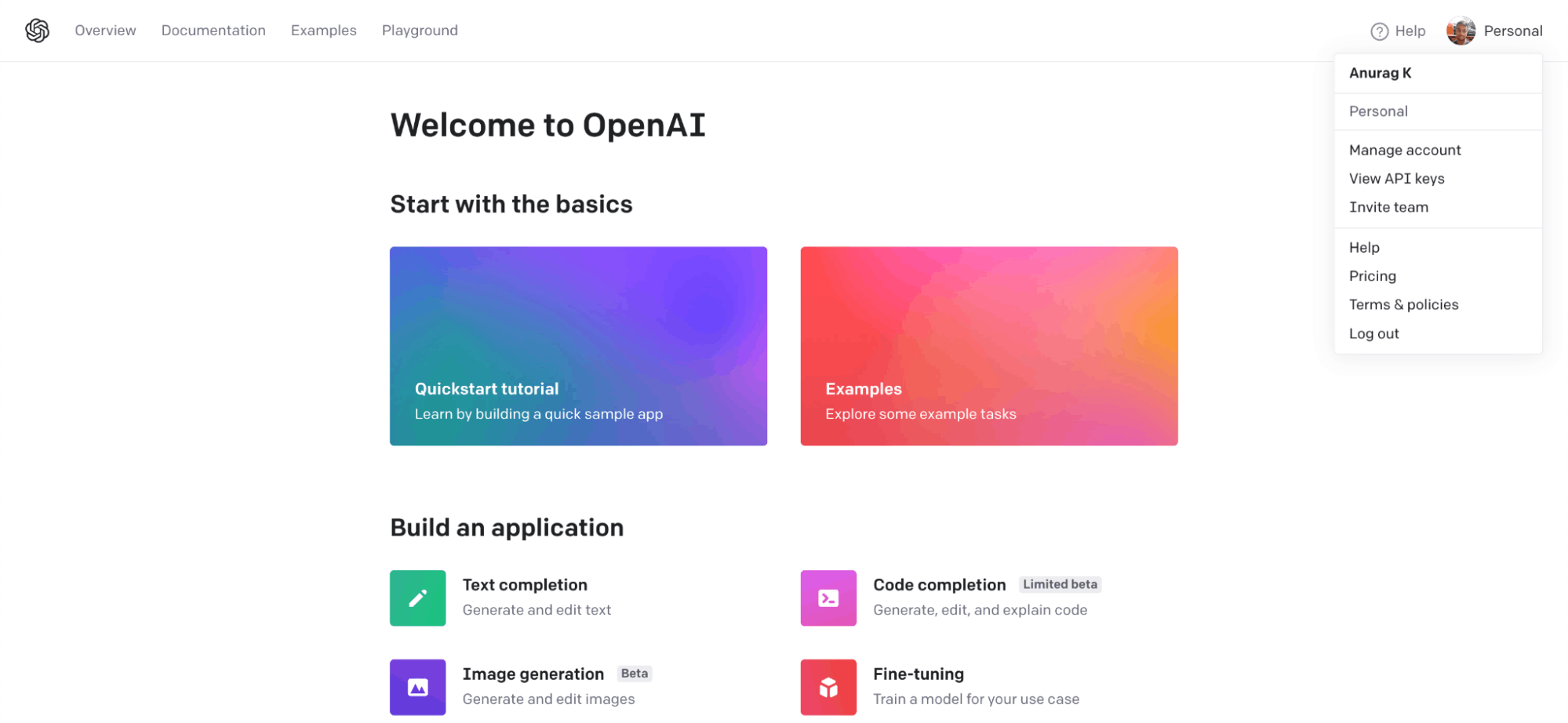

To use the OpenAI API, you need to create an account by visiting their API homepage.

After creating the account, you will see a page similar to the one shown above. Click your profile picture at the top right corner and then click on “View API Keys”.

Now click on “Create new secret key” to create a new API key. Make sure to copy the key as you won’t be able to view it again.

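Next, create a “.env” file in the root folder of your Next.js project, add a variable named “NEXT_PUBLIC_API_KEY”, and assign the API key to it. Keep in mind that Next.js exposes variables prefixed with “NEXT_PUBLIC_” to the browser, so this setup suits local experiments; in a deployed app, the key should stay on the server.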

We will use ChatGPT again to generate a code snippet that integrates the OpenAI API into our app to create social media posts. We will use the “text-davinci-003” model, which OpenAI described as its most capable GPT-3 model at the time of writing.

We also want the API call to run every time the “Generate” button is clicked.

For this, we will query ChatGPT with the following:

This will generate the following code:

As you can see from the code snippet above, we have a function called “handleClick” that uses fetch to call the OpenAI API every time the button is clicked.

We can replace our “handleClick” function, which we generated in the earlier steps, with this function. However, we need to make some minor changes:

- The “Authorization” value should be `Bearer ${process.env.NEXT_PUBLIC_API_KEY}`

- Our “prompt” value in the request body would become `Generate a social media post from: ${text1}`

- Finally, we would update our “text2” value with “setText2()” instead of “setPost()”
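Putting the three changes above together, the adapted function might look roughly like the sketch below. It targets OpenAI's Completions endpoint and reads the result from `choices[0].text`. The separate `buildCompletionRequest` helper, the `apiKey` and `fetchImpl` parameters, and the `max_tokens` value are assumptions introduced here so the logic can be exercised without a network call; they are not taken from ChatGPT's response.

```javascript
// OpenAI Completions endpoint used by the "text-davinci-003" model.
const COMPLETIONS_URL = "https://api.openai.com/v1/completions";

// Builds the fetch options. `maxTokens` and its default are illustrative assumptions.
function buildCompletionRequest(apiKey, text1, maxTokens = 256) {
  return {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({
      model: "text-davinci-003",
      prompt: `Generate a social media post from: ${text1}`,
      max_tokens: maxTokens,
    }),
  };
}

// Sends the request and hands the generated text to `setText2`.
// `fetchImpl` defaults to the global fetch and can be swapped for a stub.
async function handleClick(text1, setText2, apiKey, fetchImpl = fetch) {
  const response = await fetchImpl(
    COMPLETIONS_URL,
    buildCompletionRequest(apiKey, text1)
  );
  if (!response.ok) {
    throw new Error(`OpenAI request failed with status ${response.status}`);
  }
  const data = await response.json();
  setText2(data.choices[0].text.trim());
}
```

Inside the page component, the button could then call `handleClick(text1, setText2, process.env.NEXT_PUBLIC_API_KEY)` from its `onClick` handler. The environment variable is referenced by its full name because Next.js only inlines direct `process.env.NEXT_PUBLIC_...` references into client code.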

Finally, since we also want to generate blogs, we can change our “prompt” based on which checkbox the user has selected.
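One way to express this choice is a small lookup from the selected checkbox to a prompt prefix, sketched below. The social media wording comes from the step above; the blog wording, the `PROMPT_PREFIXES` object, the `buildPrompt` name, and the `social` / `blog` keys are assumptions for illustration.

```javascript
// Prompt prefixes keyed by the selected checkbox (keys are hypothetical).
const PROMPT_PREFIXES = {
  social: "Generate a social media post from: ",
  blog: "Generate a blog post from: ", // assumed wording, not from the original guide
};

// Returns the full prompt for the chosen content type and the user's input text.
function buildPrompt(contentType, text1) {
  const prefix = PROMPT_PREFIXES[contentType];
  if (prefix === undefined) {
    throw new Error(`Unsupported content type: ${contentType}`);
  }
  return `${prefix}${text1}`;
}
```

The returned string can then be used as the “prompt” value in the request body.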

After making all the changes, your code should look like this:

That’s it! This is how you can use ChatGPT to extend Locofy-generated code and easily integrate AI functionality into it via the OpenAI API.

With the Locofy.ai plugin, you can generate pixel-perfect frontend code that is broken down into pages and components that accept props, while ChatGPT can help you extend it in record time.

By following the steps above, you can turn your designs into production-ready Next.js code with the ability to generate social media posts and blog pages using AI.

If you want to learn more about how to convert your Figma design to React, React Native, HTML/CSS, Next.js, Vue, and more, check out our docs. Or explore our solution pages for an in-depth look at how Locofy helps you build apps, websites, portfolio pages, and responsive prototypes effortlessly using our low-code platform with seamless AI code generation.